There’s nothing people love more in sports than the appearance of “clutch”ness, probably because the ability to play “up” to a situation implies a sort of super-humanity, and we love our super-heroes. Prior to this last weekend, Tim Tebow had a remarkable streak of games in which he (and his team) played significantly better in crucial 4th-quarter situations than he (or they) did throughout the rest of those contests. Combined with Tebow’s high profile, his extremely public religious conviction, and a “divine intervention” narrative that practically wrote itself, this led to a perfect storm of hype. With the din of that hype dying down a bit (thank you, Bill Belichick), I thought I’d take the chance to explore a few of my thoughts on “clutchness” in general.

This may be a bit of a surprise coming from a statistically-oriented self-professed skeptic, but I’m a complete believer in “clutch.” In this case, my skepticism is aimed more at those who deny clutch out of hand: The principle that “Clutch does not exist” is treated as something of a sacred tenet by many adherents of the Unconventional Wisdom.

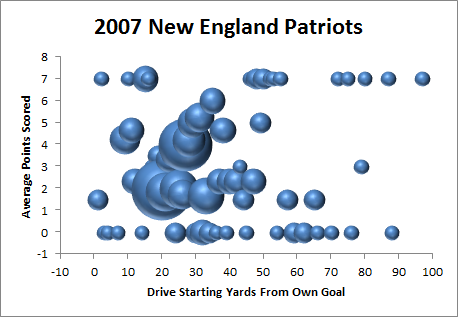

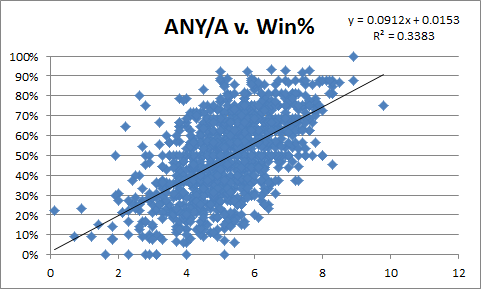

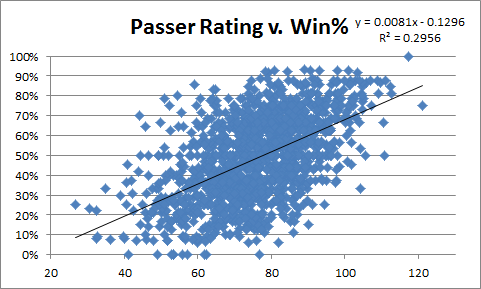

On the other hand, my belief in Clutch doesn’t necessarily mean I believe in mystical athletic superpowers. Rather, I think the “clutch” effect—that is, scenarios where the performance of some teams/players genuinely improves when game outcomes are in the balance—is perfectly rational and empirically supported. Indeed, the simple fact that winning is a statistically significant predictive variable on top of points scored and points allowed—demonstrably true for each of the 3 major American sports—is very nearly proof enough.

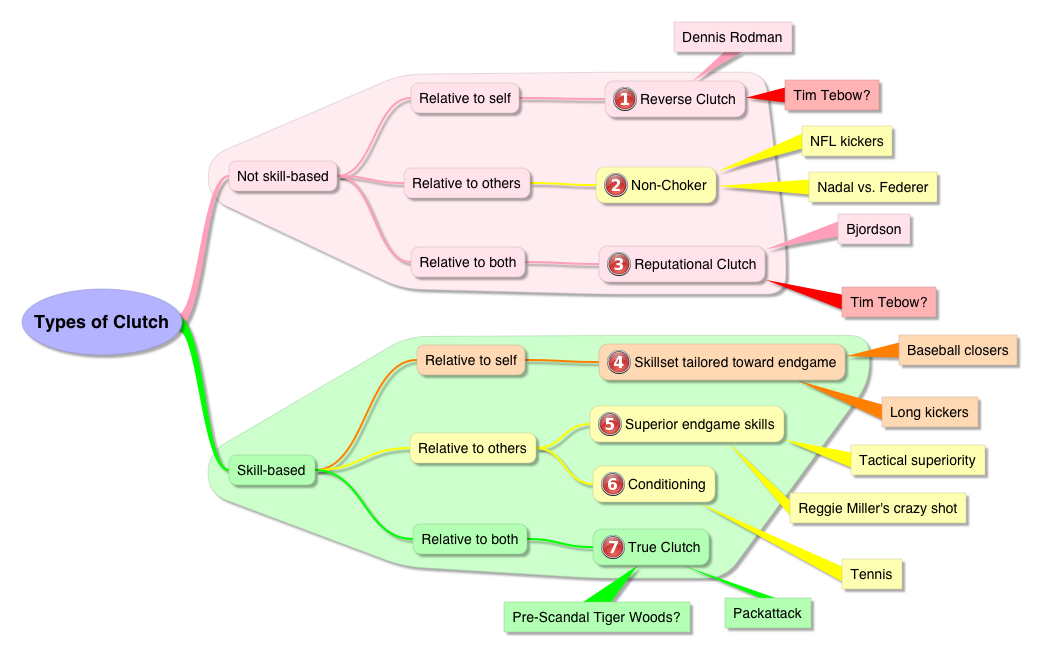

The differences between my views and those of clutch-deniers are sometimes more semantic and sometimes more empirical. In its broadest sense, I would describe “clutch” as a property inherent in players/teams/coaches who systematically perform better than normal in more important situations. From there, I see two major factors that divide clutch into a number of different types: 1) whether or not the difference is a product of the individual or team’s own skill, and 2) whether their performance in these important spots is abnormally good relative to their own performance in less important spots, whether it is good relative to the typical performance in those spots, or both. In the following chart, I’ve listed the most common types of Clutch that I can think of, a couple of examples of each, and how I think they break down w/r/t those factors:

Here are a few thoughts on each:

1. Reverse Clutch

I first discussed the concept of “reverse clutch” in this post in my Dennis Rodman series. Put simply, it’s a situation where someone has clutch-like performance by virtue of playing badly in less important situations.

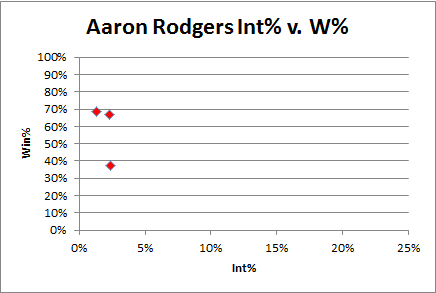

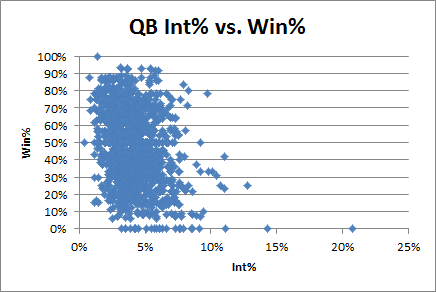

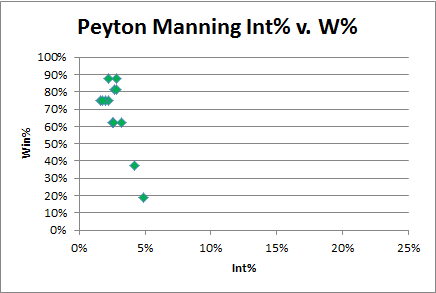

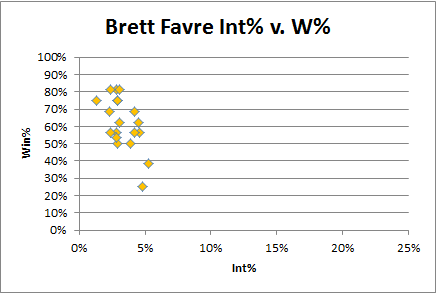

While I don’t think this is a particularly common phenomenon, it may be relevant to the Tebow discussion. During Sunday’s Broncos/Pats game, I tweeted that at least one commentator seemed to be flirting with the idea that maybe Tebow would be better off throwing more interceptions. Noting that, for all of Tebow’s statistical shortcomings, his interception rate is ridiculously low, and then noting that Tebow’s “ugly” passes generally err on the ultra-cautious side, the commentator seemed poised to put the two together—if just for a moment—before his partner steered him back to the mass media-approved narrative.

If you’re not willing to take the risks that sometimes lead to interceptions, you may also have a harder time completing passes, throwing touchdowns, and doing all those things that quarterbacks normally do to win games. And, for the most part, we know that Tebow is almost religiously (pun intended) committed to avoiding turnovers. However, in situations where your team is trailing in the 4th quarter, you may have no choice but to let loose and take those risks. Thus, it is possible that a Tim Tebow who takes risks more optimally is actually a significantly better quarterback than the Q1-Q3 version we’ve seen so far this season, and the 4th quarter pressure situations he has faced have simply brought that out of him.

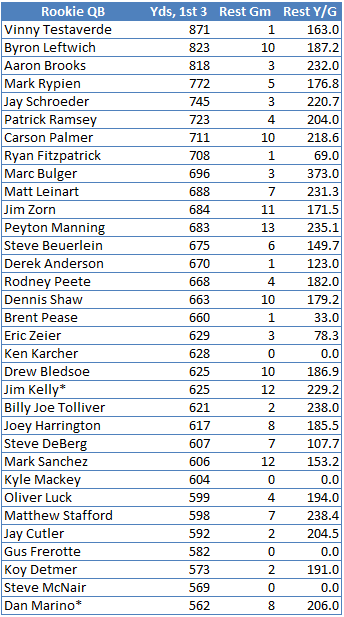

That may sound farfetched, and I certainly wouldn’t bet my life on it, but it also wouldn’t be unprecedented. Though perhaps a less extreme example, early in his career Ben Roethlisberger played on a Pittsburgh team that relied mostly on its defense, and was almost painfully conservative in the passing game. He won a ton, but with superficially unimpressive stats, a fairly low interception rate, and loads of “clutch” performances. In his rookie season he passed for only 187 yards a game, yet had SIX 4th quarter comebacks. Obviously, he eventually became regarded as an elite QB, with statistics to match.

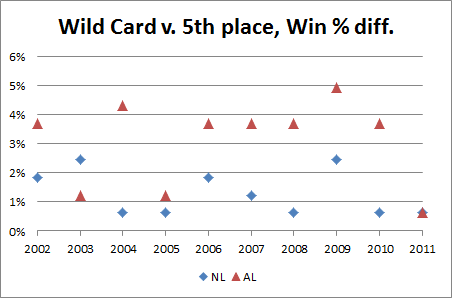

2. Not Choking

A lot of professional athletes are *not* clutch, or, more specifically, are anti-clutch. See, e.g., professional kickers. They succumb under pressure, just as any non-professionals might. While most professionals probably have a much greater capacity for handling pressure situations than amateurs, there are still significant relative imbalances between them. The athletes who do NOT choke under pressure are thus, by comparison, clutch.

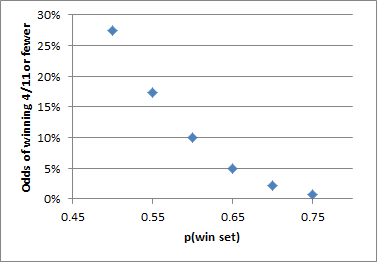

Some athletes may be more “mentally tough” than others. I love Roger Federer, and think he is among the top two tennis players of all time (Bjorn Borg being the other), and in many ways I even think he is under-appreciated despite all of his accolades. Yet, he has a pretty crap record in the closest matches, especially late in majors: lifetime, he is 4-7 in 5 set matches in the Quarterfinals or later, including a 2-4 record in his last 6. For comparison, Nadal is 4-1 in similar situations (2-1 against Federer), and Borg won 5-setters at an 86% clip.

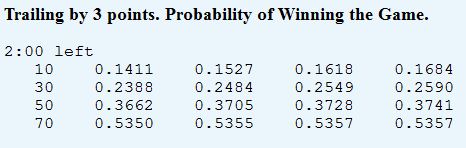

Extremely small sample, sure. But compared to Federer’s normal expectation on a set by set basis over the time-frame (even against tougher competition), the binomial probability of him losing that much without significantly diminished 5th set performance is extremely low:

Thus, as a Bayesian matter, it’s likely that a portion of Rafael Nadal’s apparent “clutchness” can be attributed to Roger Federer.
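As a rough sketch of the kind of binomial calculation described above (not the original computation): if Federer's chance of winning a deciding fifth set simply matched an assumed per-set win rate, the probability of winning 4 or fewer of 11 such sets is a binomial tail. The helper name `prob_at_most` and the value `ASSUMED_P_SET = 0.65` are illustrative placeholders, not his measured rate.

```python
from math import comb


def prob_at_most(wins: int, matches: int, p_set: float) -> float:
    """Return P(X <= wins) for X ~ Binomial(matches, p_set)."""
    return sum(
        comb(matches, k) * p_set**k * (1 - p_set) ** (matches - k)
        for k in range(wins + 1)
    )


# ASSUMPTION: placeholder per-set win rate, NOT Federer's actual figure.
ASSUMED_P_SET = 0.65

if __name__ == "__main__":
    # The 4-7 record in five-set matches (quarterfinals or later),
    # treated as 11 independent deciding sets.
    tail = prob_at_most(wins=4, matches=11, p_set=ASSUMED_P_SET)
    print(f"P(4 or fewer wins in 11 | p = {ASSUMED_P_SET}) = {tail:.4f}")
```

The higher the assumed per-set win rate, the smaller this tail probability becomes, which is the sense in which a 4-7 record looks unlikely without some drop-off in fifth sets. Treating the matches as independent trials with one fixed probability is itself a simplification.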

3. Reputational Clutch

In the finale to my Rodman series, I discussed a fictional player named “Bjordson,” who is my amalgamation of Michael Jordan, Larry Bird, and Magic Johnson, and I noted that this player has a slightly higher Win % differential than Rodman.

Now, I could do a whole separate post (if not a whole separate series) on the issue, but it’s interesting that Bjordson also has an extremely high X-Factor: that is, the average difference between their actual Win % differential and the Win % differential that would be predicted by their Margin of Victory differential is, like Rodman’s, around 10% (around 22.5% vs. 12.5%). [Note: Though the X-Factors are similar, this is subjectively a bit less surprising than Rodman having such a high W% diff., mostly because I started with W% diff. this time, so some regression to the mean was expected, while in Rodman’s case I started with MOV, so a massively higher W% was a shocker. But regardless, both results are abnormally high.]

Now, I’m sure that the vast majority of sports fans presented with this fact would probably just shrug and accept that Jordan, Bird and Johnson must have all been uber-clutch, but I doubt it. Systematically performing super-humanly better than you are normally capable of is extremely difficult, but systematically performing worse than you are normally capable of is pretty easy. Rodman’s high X-Factor was relatively easy to understand (as Reverse Clutch), but these are a little trickier.

Call it speculation, but I suspect that a major reason for this apparent clutchiness is that being a super-duper-star has its privileges. E.g.:

In other words, ref bias may help super-stars win even more than their super-skills would dictate.

I put Tim Tebow in the chart above as perhaps having a bit of “reputational clutch” as well, though not because of officiating. Mostly it just seemed that, over the last few weeks, the Tebow media frenzy led to an environment where practically everyone on the field was going out of their minds—one way or the other—any time a game got close late.

4. Skills Relevant to Endgame

Numbers 4 and 5 in the chart above are pretty closely related. The main distinction is that #4 can be role-based and doesn’t necessarily imply any particular advantage. In fact, you could have a relatively poor player overall who, by virtue of their specific skillset, becomes significantly more valuable in endgame situations. E.g., closing pitchers in baseball: someone with a comparatively high ERA might still be a good “closing” option if they throw a high percentage of strikeouts (it doesn’t matter how many home runs you normally give up if a single or even a pop-up will lose the game).

Straddling 4 and 5 is one of the most notorious “clutch” athletes of all time: Reggie Miller. Many years ago, I read an article that examined Reggie’s career and determined that he wasn’t clutch because he hit a relatively normal percentage of 3 point shots in clutch situations. I didn’t even think about it at the time, but I wish I could find the article now, because, if true, it almost certainly proves exactly the opposite of what the authors intended.

The amazing thing about Miller is that his jump shot was so ugly. My theory is that the sheer bizarreness of his shooting motion made his shot extremely hard to defend (think Hideo Nomo in his rookie year). While this didn’t necessarily make him a great shooter under normal circumstances, he could suddenly become extremely valuable in any situations where there is no time to set up a shot and heavy perimeter defense is a given. Being able to hit ANY shots under those conditions is a “clutch” skill.

5. Tactical Superiority (and other endgame skills)

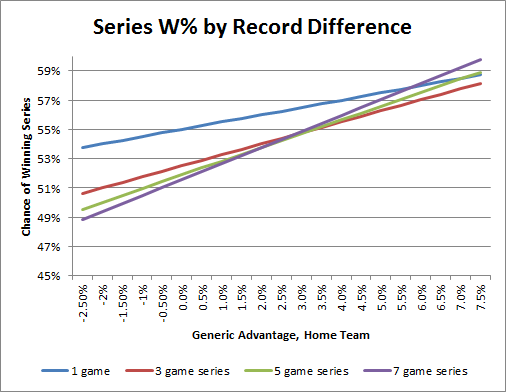

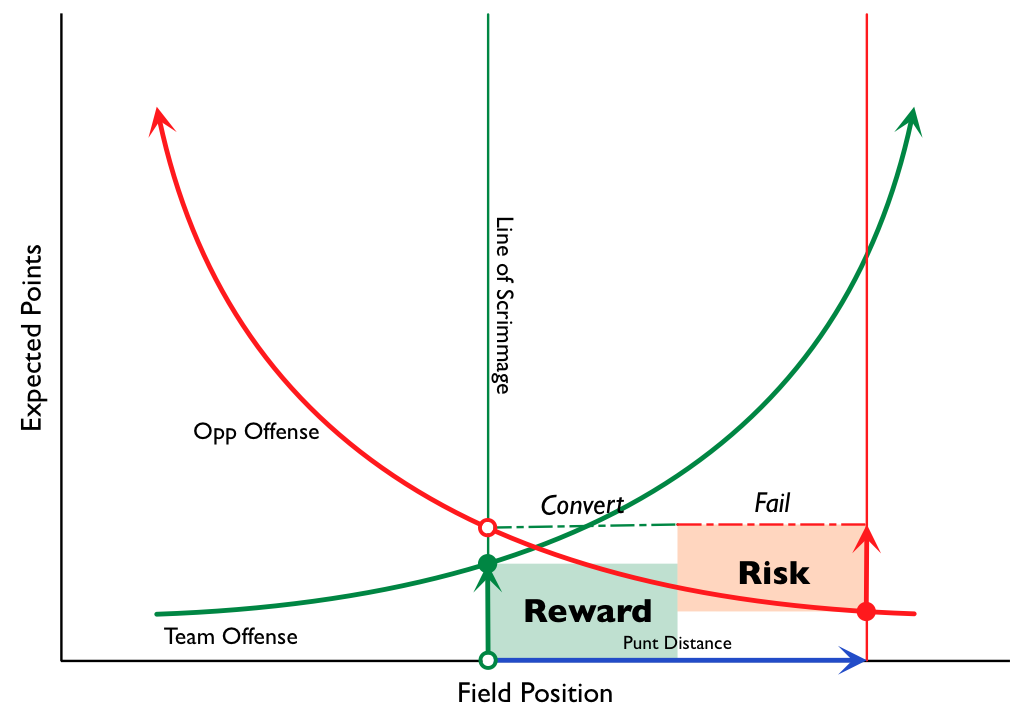

Though other types of skills can fit into this branch of the tree, I think endgame tactics is the area where teams, coaches, and players are most likely to have disparate impacts, thus leading to significant advantages w/r/t winning. The simple fact is that endgames are very different from the rest of games, and require a whole different mindset. Meanwhile, leagues select for people with a wide variety of skills, leaving some much better at endgame tactics than others.

Win expectation supplants point expectation. If you’re behind, you have to take more risks, and if you’re ahead, you have to avoid risks—even at the cost of expected value. If you’re a QB, you need to consider the whole range of outcomes of a play more than just the average outcome or the typical outcome. If you’re a QB who is losing, you need to throw pride out the window and throw interceptions! There is clock management, knowing when to stay in bounds and when to go down. As a baseball manager, you may face your most difficult pitching decisions, and as a pitcher, you may have to make unusual pitch decisions. A batter may have to adjust his style to the situation, and a pitcher needs to anticipate those adjustments. Etc., etc., ad infinitum. They may not be as flashy as Reggie Miller 3-ball, but these little things add up, and are probably the most significant source of Clutchness in sports.
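To make the "win expectation supplants point expectation" idea concrete, here is a toy sketch with entirely made-up numbers. It compares two hypothetical play-calling strategies on a final possession: the conservative one has the higher expected points, but when trailing by 4 only the aggressive one can actually win. The `Strategy` class, the outcome probabilities, and the deficits are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    name: str
    # Each outcome is (points scored on the final drive, probability).
    outcomes: tuple


def expected_points(s: Strategy) -> float:
    """Average points the strategy yields on the drive."""
    return sum(points * prob for points, prob in s.outcomes)


def win_probability(s: Strategy, deficit: int) -> float:
    """Chance the drive scores more than the current deficit."""
    return sum(prob for points, prob in s.outcomes if points > deficit)


# ASSUMPTION: invented probabilities, chosen only to show the effect.
CONSERVATIVE = Strategy("conservative", ((3, 0.6), (0, 0.4)))
AGGRESSIVE = Strategy("aggressive", ((7, 0.2), (0, 0.8)))

if __name__ == "__main__":
    for deficit in (2, 4):
        for s in (CONSERVATIVE, AGGRESSIVE):
            print(
                f"down {deficit}, {s.name}: "
                f"EV = {expected_points(s):.2f} pts, "
                f"P(win) = {win_probability(s, deficit):.2f}"
            )
```

Down by 2, the conservative strategy is better on both measures; down by 4, it keeps its edge in expected points but cannot win at all. The same strategy can be right or wrong depending only on the score.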

6. Conditioning

I listed this separately (rather than as an example of 4 or 5) just because I think it’s not as simple and neat as it seems.

While conditioning and fitness are important in every sport, and they tend to be more important later in games, they’re almost too pervasive to be “clutch” as I described it above. The fact that most major team sports have more or less uniform game lengths means that conditioning issues should manifest similarly basically every night, and should therefore be reflected in most conventional statistics (like minutes played, margin of victory, etc), not just in those directly related to winning.

Ultimately, I think conditioning has the greatest impact on “clutchness” in tennis, where it is often the deciding factor in close matches.

7. True Clutch

And finally, we get to the Holy Grail of Clutch. This is probably what most “skeptics” are thinking of when they deny the existence of Clutch, though I think that such denials—even with this more limited scope—are generally overstated. If such a quality exists, it is obviously going to be extremely rare, so the various statistical studies that fail to find it prove very little.

The most likely example in mainstream sports would seem to be pre-scandal Tiger Woods. In his prime, he had an advantage over the field in nearly every aspect of the game, but golf is a fairly high variance sport, and his scoring average was still only a point or two lower than the competition. Yet his Sunday prowess is well documented: He has gone 48-4 in PGA tournaments when entering the final round with at least a share of the lead, including an 11-1 record with only a share of the lead. Also, to go a bit more esoteric, Woods has successfully defended a title 22 times. So, considering he has 71 career wins, and at least 22 of them had to be first timers, that means his title defense record is closer to 40-45%, depending on how often he won titles many times in a row. Compare this to his overall win-rate of 27%, and the idea that he was able to elevate his game when it mattered to him the most is even more plausible.

Of course, I still contend that the most clutch thing I have ever seen is Packattack’s final jump onto the .1 wire in his legendary A11 run. Tim Tebow, eat your heart out!