When I began writing about Dennis Rodman, I was so terrified that I would miss something and the whole argument would come crashing down that I kept pushing it further and further and further, until a piece I initially planned to be about 10 pages of material ended up being more like 150. [BTW, this whole post may be a bit too inside-baseball if you haven’t actually read—or at least skimmed—my original “Case for Dennis Rodman.” If so, that link has a helpful guide.]

The downside of this, I assumed, was that the extra material would open up many angles of attack. It was a conscious trade-off: individual parts of the argument would be more vulnerable, but the Case as a whole would be thorough and redundant enough to survive any battles I might end up losing.

Ultimately, however, I’ve been a bit disappointed in the critical response. Most reactions I’ve seen have been either extremely complimentary or extremely dismissive.

So a while ago, I decided that if no one really wanted to take on the task, I would do it myself. In one of the Rodman posts, I wrote:

Give me an academic who creates an interesting and meaningful model, and then immediately devotes their best efforts to tearing it apart!

And thus The Case Against the Case for Dennis Rodman is born.

Before starting, here are a few qualifying points:

- I’m not a lawyer, so I have no intention of arguing things I don’t believe. I’m calling this “The Case Against the Case For Dennis Rodman,” because I cannot in good faith (barring some new evidence or argument I am as yet unfamiliar with) write The Case Against Dennis Rodman.

- Similarly, where I think an argument is worth being raised and discussed but ultimately fails, I will make the defense immediately (much like “Objections and Replies”).

- I don’t have an over-arching anti-Case hypothesis to prove, so don’t expect this series to be a systematic takedown of the entire enterprise. Rather, I will point out weaknesses as I consider them, so they may not come in any kind of predictable order.

- If you were paying attention, of course you noticed that The Case For Dennis Rodman was really (or at least concurrently) about demonstrating how player valuation is much more dynamic and complicated than either conventional or unconventional wisdom gives it credit for. But, for now, The Case Against the Case will focus mainly on the Dennis Rodman part.

Ok, so with this mission in mind, let me start with a bit of what’s out there already:

A Not-Completely-Stupid Forum Discussion

I admit, I spend a fair amount of time following back links to my blog. Some of that is just ego-surfing, but I’m also desperate to find worthy counter-arguments.

As I said above, that search is sometimes more fruitless than I would like. Even the more intelligent discussions usually include a lot of uninspired drivel. For example, let’s look at a recent thread on RealGM. After one person lays out a decent (though imperfect) summary of my argument, there are several responses along the lines of this one from poster “SVictor”:

I won’t pay attention to any study that states that [Rodman might be more valuable than Michael Jordan].

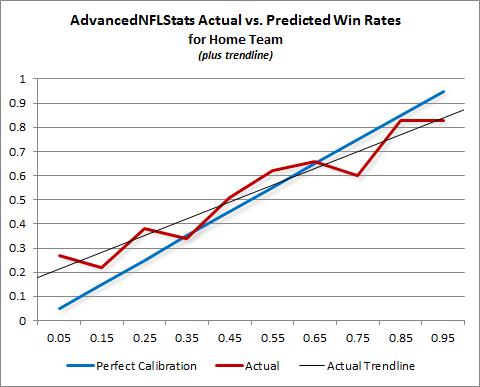

Actually, I’m pretty sympathetic to this kind of objection. There can be a Bayesian ring of truth to “that is just absurd on its face” arguments (I once made a similar argument against an advanced NFL stat after it claimed Neil O’Donnell was the best QB in football). However, it’s not really a counter-argument so much as a meta-argument, and I think I’ve considered most of those to death. Besides, I don’t actually make the claim in question; I merely suggest it as something worth considering.

A much more detailed and interesting response comes from poster “mysticbb.” Now, he starts out pretty insultingly:

The argumentation is biased, it is pretty obvious, which makes it really sad, because I know how much effort someone has to put into such analysis.

I cannot say affirmatively that I have no biases, or that bias never affects my work. Study after study shows that this is virtually impossible. But I can say that I am completely and fundamentally committed to identifying it and stamping it out wherever I can. So, please—as I asked in my conclusion—please point out where the bias is evident and I will do everything in my power to fix it.

Oddly, though, mysticbb seems to endorse (almost verbatim) the proposition that I set out to prove:

Let me start with saying that Dennis Rodman seems to be underrated by a lot of people. He was a great player and deserved to be in the HOF, I have no doubt about that. He had great impact on the game and really improved his team while playing.

(People get so easily distracted: You write one article about a role-player maybe being better than Michael Jordan, and they forget that your overall claim is more modest.)

Of course, my analysis could just be way off, particularly in ways that favor Rodman. To that end, mysticbb raises several valid points, though with various degrees of significance.

Here he is on Rodman’s rebounding:

Let me start with the rebounding aspect. From 1991 to 1998 Rodman was leading the league in TRB% in each season. He had 17.7 ORB%, 33 DRB% and overall 25.4 TRB%. Those are AWESOME numbers, if we ignore context. Let us take a look at the numbers for the playoffs during the same timespan: 15.9 ORB%, 27.6 DRB% and 21.6 TRB%. Still great numbers, but obviously clearly worse than his regular season numbers. Why? Well, Rodman had the tendency to pad his rebounding stats in the regular season against weaker teams, while ignoring defensive assignments and fighting his teammates for rebounds. All that was eliminated during the playoffs and his numbers took a hit.

Now, I don’t know how much I talked about the playoffs per se, but I definitely discussed—and even argued myself—that Rodman’s rebounding numbers are likely inflated. But I also argued that if that IS the case, it probably means Rodman was even more valuable overall (see that same link for more detail). He continues:

Especially when we look at the defensive rebounding part, during the regular season he is clearly ahead of Duncan or Garnett, but in the playoffs they are all basically tied. Now imagine, Rodman brings his value via rebounding, what does that say about him, if that value is matched by players like Duncan or Garnett who both are also great defenders and obviously clearly better offensive players?

Now, as I noted at the outset, Rodman’s career offensive rebounding percentage is approximately equal to Kevin Garnett’s career overall rebounding percentage, so I think Mystic is making a false equivalency based on a few cherry-picked stats.

But, for a moment, let’s assume it were true that Garnett/Duncan had similar rebounding numbers to Rodman. So what? Rodman’s crazy rebounding numbers cohere nicely with the rest of the puzzle as an explanation of why he was so valuable (his absurd rebounding stats make his absurd impact stats more plausible and vice versa), but they’re technically incidental. Indeed, they’re even incidental to his rebounding contribution: The number (or even percent) of rebounds a player gets does not correlate very strongly with the number of rebounds he has actually added to his team (nor does a player’s offensive “production” correlate very strongly with improvement in a team’s offense), and it does so the most on the extremes.

But I give the objection credit in this regard: The playoff/regular season disparity in Rodman’s rebounding numbers (though let’s not overstate the case: Rodman has 3 of the top 4 TRB%’s in playoff history) does serve to highlight how dynamic basketball statistics are. The original Case For Dennis Rodman is perhaps too willing to draw straight causal lines, and that may be worth looking into. A more thorough examination of Rodman’s playoff performance may be in order as well.
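To put the quoted playoff/regular season disparity in proportional terms, here is a minimal sketch that uses only the 1991 to 1998 rates mysticbb cites. The function name `relative_drop` is just an illustrative helper, and the calculation says nothing about why the numbers fell.

```python
# Rebound rates quoted by mysticbb for 1991-1998 (percent of available rebounds).
regular_season = {"ORB%": 17.7, "DRB%": 33.0, "TRB%": 25.4}
playoffs = {"ORB%": 15.9, "DRB%": 27.6, "TRB%": 21.6}


def relative_drop(regular: float, playoff: float) -> float:
    """Fraction of the regular-season rate that is lost in the playoffs."""
    return (regular - playoff) / regular


# Relative decline for each rate, keyed by the same stat labels.
drops = {
    stat: relative_drop(regular_season[stat], playoffs[stat])
    for stat in regular_season
}

for stat, drop in drops.items():
    print(f"{stat}: {regular_season[stat]:.1f} -> {playoffs[stat]:.1f} ({drop:.1%} lower)")
```

On these figures the defensive rate falls proportionally more than the offensive one, which fits mysticbb's emphasis on defensive rebounding but does not by itself say anything about stat-padding.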

On the indirect side of The Case, mysticbb has this to say:

[T]he high difference between the team performance in games with Rodman and without Rodman is also caused by a difference in terms of strength of schedule, HCA and other injured players.

I definitely agree that my crude calculation of Win % differentials does not control for a number of things that could be giving Rodman, or any other player, a boost. Controlling for some of these things is probably possible, if more difficult than you might think. This is certainly an area where I would like to implement some more robust comparison methods (and I’m slowly working on it).

But, ultimately, all of the factors mysticbb mentions are noise. Circumstances vary and lots of things happen when players miss games, and there are a lot of players and a lot of circumstances in the sample that Rodman is compared to: everyone has a chance to get lucky. That chance is reflected in my statistical significance calculations.
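For readers who want to see the shape of a with/without comparison, the following is a rough sketch of a win % differential plus a generic pooled two-proportion z-test. It is not necessarily the significance calculation used in the original series. The function name and the 60-20 / 10-10 records are hypothetical, and the test treats games as independent and ignores schedule strength, home court, and other injuries, which are exactly the factors mysticbb raises.

```python
from math import sqrt
from statistics import NormalDist


def win_pct_differential(wins_in, games_in, wins_out, games_out):
    """Return (differential, z, one-sided p-value) for with/without win rates."""
    p_in = wins_in / games_in
    p_out = wins_out / games_out
    # Pooled win rate under the null hypothesis that the player makes no difference.
    pooled = (wins_in + wins_out) / (games_in + games_out)
    se = sqrt(pooled * (1 - pooled) * (1 / games_in + 1 / games_out))
    z = (p_in - p_out) / se
    # Chance of a gap at least this large if the player truly had no effect.
    p_value = 1 - NormalDist().cdf(z)
    return p_in - p_out, z, p_value


# Hypothetical records (not real data): 60-20 with the player, 10-10 without.
diff, z, p = win_pct_differential(60, 80, 10, 20)
print(f"differential={diff:+.3f}, z={z:.2f}, one-sided p={p:.3f}")
```

The p-value here is the "chance to get lucky" for a single player. Comparing many players at once, as the series does, raises the odds that someone posts a large differential by luck alone.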

Mysticbb makes some assertions about Rodman having a particularly favorable schedule, but cites only the 1997 Bulls, and it’s pretty thin gruel:

If we look at the 12 games with Kukoc instead of Rodman we are getting 11.0 SRS. So, Rodman over Kukoc made about 0.5 points.

Of course, if there is evidence that Rodman was especially lucky over his career, I would like to see it. But, hmm, since I’m working on the Case Against myself, I guess that’s my responsibility as well. Fair enough, I’ll look into it.
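One way to see why a 12-game sample is thin is to estimate how much an average margin over 12 games can move by chance. The sketch below assumes a game-to-game spread of about 12 points in scoring margin. That figure is an assumption for illustration, not something from the thread or the original series, and it treats SRS like a raw point margin.

```python
from math import sqrt

ASSUMED_MARGIN_SD = 12.0   # assumption: per-game spread in point margin, not from the post
GAMES_WITHOUT_RODMAN = 12  # sample size quoted by mysticbb
QUOTED_GAP = 0.5           # points, Rodman over Kukoc, as quoted by mysticbb


def standard_error(sd: float, n: int) -> float:
    """Standard error of a mean over n independent games."""
    return sd / sqrt(n)


se = standard_error(ASSUMED_MARGIN_SD, GAMES_WITHOUT_RODMAN)
gap_in_se_units = QUOTED_GAP / se

print(f"standard error over {GAMES_WITHOUT_RODMAN} games: {se:.2f} points")
print(f"quoted gap is {gap_in_se_units:.2f} standard errors")
```

Under that assumption the quoted half-point gap is a small fraction of the sampling error in the 12-game average, so it cannot tell us much in either direction.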

Finally, mysticbb argues:

The last point which needs to be considered is the offcourt issues Rodman caused, which effected the outcome of games. Take the 1995 Spurs for example, when Rodman refused to guard Horry on the perimeter leading to multiple open 3pt shots for Horry including the later neck-breaker in game 6. The Spurs one year later without Rodman played as good as in 1995 with him.

I don’t really have much to say on the first part of this. As I noted at the outset, there’s some chance that Rodman caused problems on his team, but I feel completely incompetent to judge that sort of thing. But the other part is interesting: It’s true that the Spurs were only 5% worse in 95-96 than they were in 94-95 (OFC, they would be worse measuring only against games Rodman played in), but cross-season comparisons are obviously tricky, for a number of reasons. And if they did exist, I’m not sure they would break the way suggested. For example, the 2nd Bulls 3-peat teams were about as much better than the first Bulls 3-peat as the first Bulls 3-peat was better than the 93-95 teams that were sans Michael Jordan.

That said, I actually do find multi-season comparisons to be a valid area for exploration. So, e.g., I’ve spent some time looking at rookie impact and how predictive it is of future success (answer: probably more than you think).

Finally, a poster named “parapooper” makes some points that he credits to me, including:

He also admits that Rodman actually has a big advantage in this calculation because he missed probably more games than any other player due to reasons other than health and age.

I don’t actually remember making this point, at least this explicitly, but it is a valid concern IMO. A lot of the In/Out numbers my system generated include seasons where players were old or infirm, which disadvantages them. In fact, I initially tried to excise these seasons, and tried accounting for them in a variety of ways, such as comparing “best periods” to “best periods”, etc. But I found such attempts to be pretty unwieldy and arbitrary, and they shrank the sample size more than I thought they were worth, without affecting the bottom line: Rodman just comes out on top of a smaller pile. That said, some advantage to Rodman relative to others must exist, and quantifying that advantage is a worthy goal.

A similar problem that “para” didn’t mention specifically is that a number of the in/out periods for players include spots where the player was traded. In subsequent analysis, I’ve confirmed what common sense would probably indicate: A player’s differential stats in trade scenarios are much less reliable. Future versions of the differential comparison should account for this, one way or another.

The differential analysis in the series does seem to be the area that most needs upgrading, though the constant trade-off between more information and higher quality information means it will never be as conclusive as we might want it to be. Not mentioned in this thread (that I saw), but something I will certainly deal with myself, are broader objections to the differential comparisons as an enterprise. So, you know. Stay tuned.