So apparently San Francisco backup wide receiver Randy Moss made some headlines at Super Bowl media day by expressing the opinion that he is the greatest receiver of all time.

Much of the response I’ve seen on Twitter has looked like this:

Randy Moss just pronounced himself the greatest WR of all time… Not even the greatest WR to wear the Niner uni.

— Bruce Feldman (@BFeldmanCBS) January 29, 2013

The ESPN article similarly emphasizes Jerry Rice’s superior numbers:

[Moss] has 982 catches for 15,292 yards and 156 touchdowns in his 14-season career.

Hall of Famer Jerry Rice, who now is an ESPN NFL analyst, leads the all-time lists in those three categories with 1,549 receptions, 22,895 yards and 197 touchdown receptions.

Elsewhere, they do note that Jerry Rice played 20 seasons.

Mike Sando has some analysis and a round-up of analyst and fan reactions, including several similar points under the heading “The Stats”, and this slightly snarky caption:

Randy Moss says he’s the greatest WR of all time. @JerryRice: “Put my numbers up against his numbers.” We did –>

So when I first saw this story, I kind of laughed it off (generally I’m against claims of greatness that don’t come with 150-page proofs), but then I saw what Randy Moss actually said:

“I don’t really live on numbers, I really live on impact and what you’re able to do out on the field,” he said Tuesday. “I really think I’m the greatest receiver to ever play this game.”

From this, I think the only logical conclusion is that Randy Moss clearly reads this blog.

As any of my ultra-long-time readers know, I’ve written about Randy Moss before. “Quantum Randy Moss—An Introduction to Entanglement” was one of my earliest posts (and probably my first ever to be read by anyone other than friends and family).

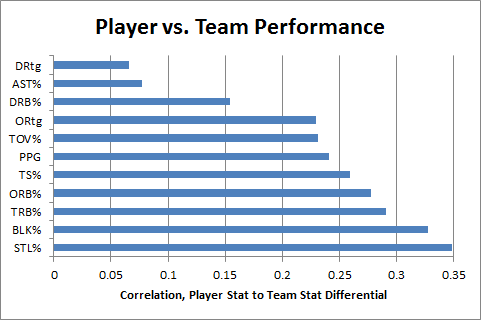

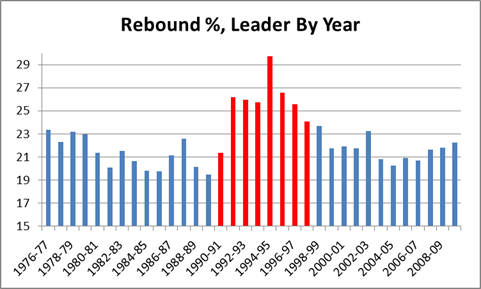

Cliff’s Notes version: I think Moss is right that yards and touchdowns and other production “numbers” don’t matter as much as “impact”, or what a player’s actual effect is on his team’s ability to move the ball, score points, and ultimately win games. Unfortunately, isolating a player’s “true value” can be virtually impossible in the NFL, since everyone’s stats are highly “entangled.” However, Randy Moss may come the closest to having a robust data set that’s actually on point, since, for a variety of reasons, he has played with a LOT of different quarterbacks. When I wrote that article, it was clear that all of them played *much* better with Moss than without him.

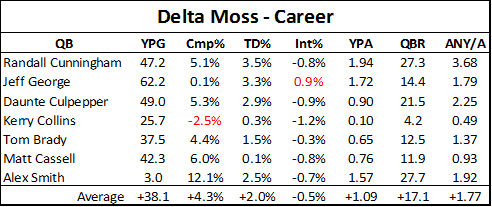

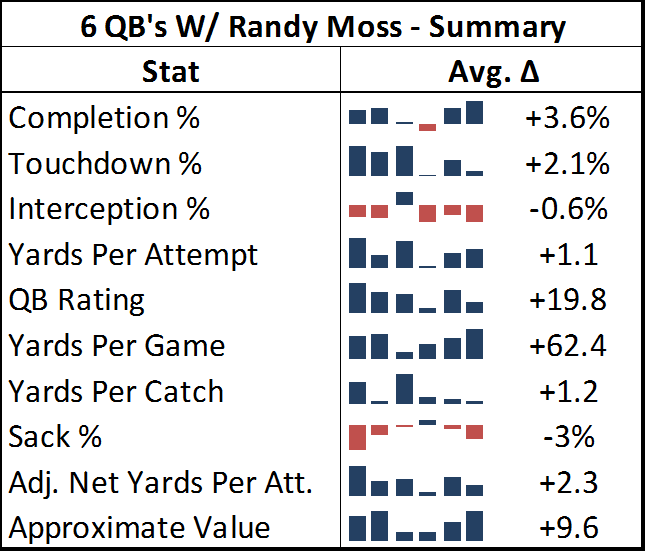

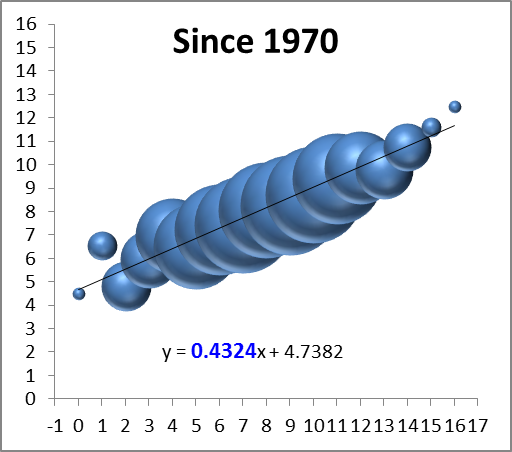

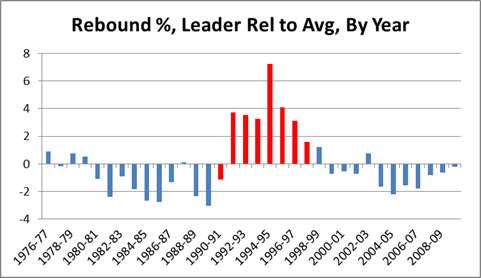

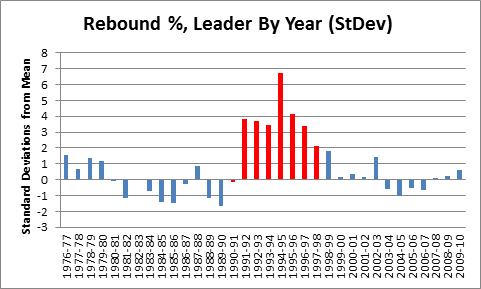

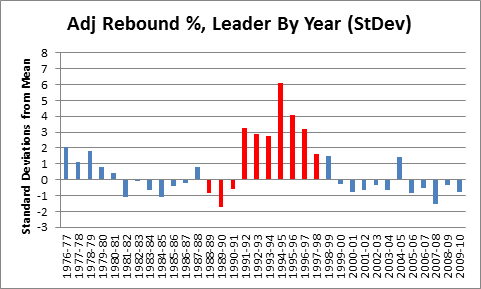

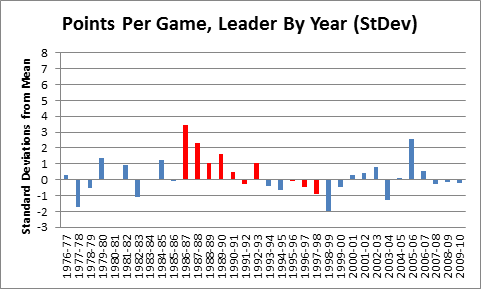

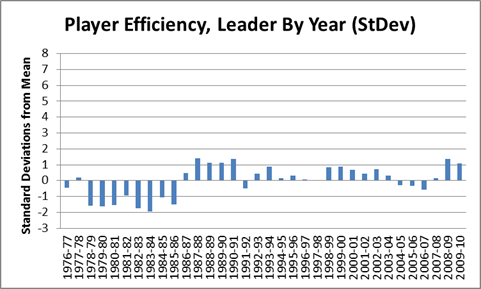

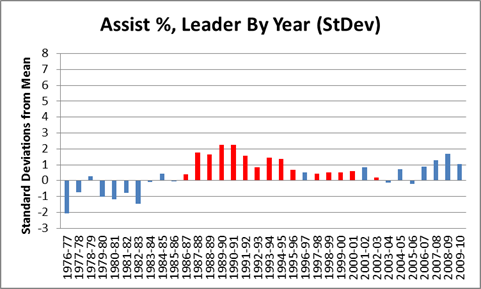

Given this latest “controversy,” I thought I’d take a quick chance to update my old data. After all, Tom Brady and Matt Cassel have each played more seasons since I did my original analysis. Also, while it may or may not be relevant given Moss’s more limited role and lower statistical production, Alex Smith now actually qualifies under my original criteria (playing at least 9 games with Randy Moss in a single season). So, for what it’s worth, I’ve included him as well. Here’s the updated comparison of seasons with Randy Moss vs. career without him (for more details, read the original article):

Note: I calculated these numbers a tiny bit differently than before. Specifically, I excluded all performance stats from seasons in which a QB played fewer than 4 games.
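For the curious, the with/without split can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the actual spreadsheet behind the charts: the `Season` record and its fields are hypothetical, a “Moss season” means at least 9 games with Moss, seasons with fewer than 4 games played are dropped, seasons with only a few games alongside Moss are left out of both groups, and the two groups are compared with an attempt-weighted average of ANY/A (which may not match how the published numbers were aggregated). The sample seasons are made up purely to exercise the code.

```python
from dataclasses import dataclass


@dataclass
class Season:
    qb: str
    year: int
    games: int            # games the QB played that season
    games_with_moss: int  # games he played alongside Moss
    attempts: int         # pass attempts, used as the averaging weight
    any_a: float          # adjusted net yards per attempt


MIN_GAMES = 4        # seasons with fewer games played are dropped entirely
MOSS_THRESHOLD = 9   # games with Moss needed for a "Moss season"


def split_seasons(seasons):
    """Apply the 4-game filter, then split into (with Moss, without Moss).

    Assumption: seasons with 1-8 games alongside Moss count as neither.
    """
    kept = [s for s in seasons if s.games >= MIN_GAMES]
    with_moss = [s for s in kept if s.games_with_moss >= MOSS_THRESHOLD]
    without = [s for s in kept if s.games_with_moss == 0]
    return with_moss, without


def weighted_any_a(seasons):
    """Attempt-weighted average ANY/A across a group of seasons."""
    total_attempts = sum(s.attempts for s in seasons)
    return sum(s.any_a * s.attempts for s in seasons) / total_attempts


def moss_effect(seasons):
    """ANY/A with Moss minus ANY/A without him."""
    with_moss, without = split_seasons(seasons)
    return weighted_any_a(with_moss) - weighted_any_a(without)


# Hypothetical seasons for an imaginary "QB X" (not real data).
demo = [
    Season("QB X", 2001, 16, 0, 500, 5.0),
    Season("QB X", 2002, 16, 16, 500, 7.0),
    Season("QB X", 2003, 3, 0, 90, 2.0),   # dropped: fewer than 4 games
    Season("QB X", 2004, 12, 0, 300, 5.8),
]
print(round(moss_effect(demo), 2))  # prints 1.7
```

Weighting by attempts keeps a short, noisy season from counting as much as a full one; a pooled calculation from raw yardage totals would be a reasonable alternative.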

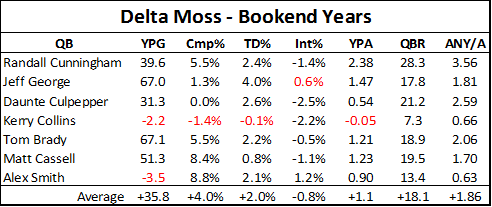

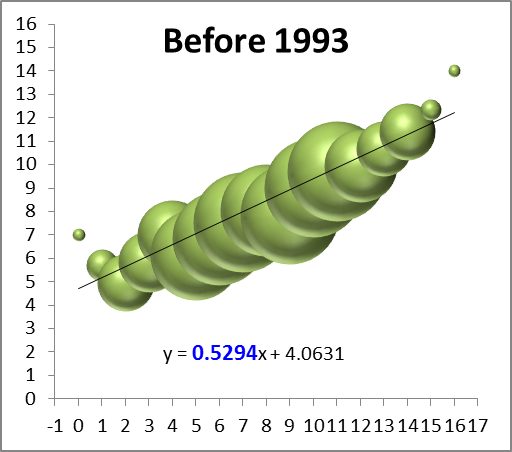

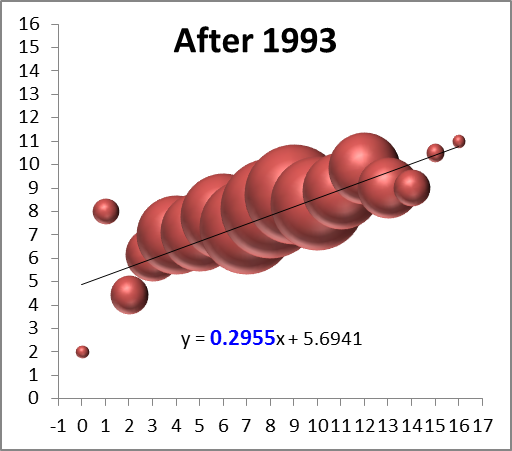

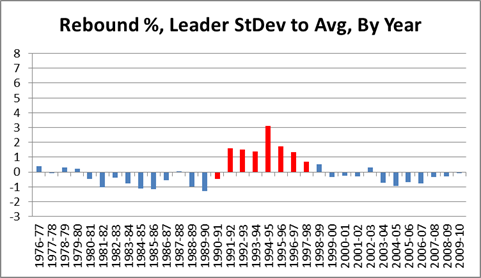

Of course, Alex Smith had a much better season last year than he has previously in his career, so that got me thinking it might be worth trying to make a slightly more apples-to-apples comparison for all 7 quarterbacks. So I filtered the data to compare seasons with Randy Moss only against “Bookend” seasons—that is, each quarterback’s seasons immediately before or after playing with Moss (if applicable):
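One way to express the “Bookend” filter in code is sketched below. It assumes one reading of “immediately before or after”: for each quarterback, take the latest non-Moss season before his first Moss season and the earliest non-Moss season after his last one, when those exist. The `Season` record and the sample data are hypothetical, chosen only to show the selection.

```python
from dataclasses import dataclass


@dataclass
class Season:
    qb: str
    year: int
    with_moss: bool  # True if this season counts as a "Moss season"
    any_a: float


def bookend_seasons(seasons):
    """For each QB, return the non-Moss seasons adjacent to his Moss stint.

    At most one season is taken on each side; non-Moss seasons falling
    between two separate Moss stints are ignored in this sketch.
    """
    result = []
    for qb in sorted({s.qb for s in seasons}):
        mine = sorted((s for s in seasons if s.qb == qb), key=lambda s: s.year)
        moss_years = [s.year for s in mine if s.with_moss]
        if not moss_years:
            continue  # never played with Moss: no bookends
        first, last = min(moss_years), max(moss_years)
        before = [s for s in mine if not s.with_moss and s.year < first]
        after = [s for s in mine if not s.with_moss and s.year > last]
        if before:
            result.append(before[-1])  # latest season before the stint
        if after:
            result.append(after[0])    # earliest season after the stint
    return result


# Hypothetical quarterbacks and seasons (not real data).
demo = [
    Season("QB Y", 2003, False, 5.5),
    Season("QB Y", 2004, False, 6.0),
    Season("QB Y", 2005, True, 7.5),
    Season("QB Y", 2006, False, 6.2),
    Season("QB Y", 2007, False, 6.8),
    Season("QB Z", 2010, True, 7.0),
    Season("QB Z", 2011, False, 5.9),
]
print([(s.qb, s.year) for s in bookend_seasons(demo)])
```

Restricting the comparison to adjacent seasons trades sample size for similarity of circumstances (age, supporting cast, scheme), which is why more variability is expected.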

Here we can see a little bit more variability, as we would expect considering the smaller sample of seasons for comparison, but the bottom line is unchanged. On average, the “Moss effect” even appears to be slightly larger overall. Adjusted Net Yards Per Attempt is probably the best single metric for measuring QB/passing game efficiency, and a difference of 1.77 is about what separates QBs like Aaron Rodgers from Shaun Hill (7.54 v. 5.68), or a Peyton Manning from a Gus Frerotte (7.11 v. 5.27).
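For readers who haven’t met ANY/A: the widely used definition (the one Pro-Football-Reference publishes) credits 20 yards per passing touchdown, charges 45 yards per interception, and treats sacks as pass attempts that lose their sack yardage. A small sketch of that formula follows; the stat line in the example is hypothetical, and the last part simply checks the gaps between the figures quoted above.

```python
def any_a(pass_yds, pass_td, ints, sack_yds, attempts, sacks):
    """Adjusted net yards per pass attempt (Pro-Football-Reference form)."""
    adjusted_yards = pass_yds + 20 * pass_td - 45 * ints - sack_yds
    return adjusted_yards / (attempts + sacks)


# Hypothetical season: 4,000 yards, 30 TD, 10 INT, 30 sacks for 200 yards.
print(round(any_a(4000, 30, 10, 200, 550, 30), 2))  # prints 6.81

# Gaps between the ANY/A figures quoted in the text.
pairs = {"Rodgers vs. Hill": (7.54, 5.68), "Manning vs. Frerotte": (7.11, 5.27)}
for label, (better, worse) in pairs.items():
    print(label, round(better - worse, 2))  # 1.86 and 1.84
```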

This magnitude of difference is down slightly from the calculations I did in 2010. This is partly because of a change in method (see “note” above), but, in fairness, also partly because Tom Brady’s “non-Moss” numbers have improved a bit in the last couple of seasons. On the other hand, the samples are also larger, which makes the unambiguous end result a bit more reliable.

Even Smith clearly had better statistics this season with Moss (not to mention Colin Kaepernick seems to be doing OK as well). Whether that improvement is due to Moss (or more likely, the fear of Moss), who knows. For any particular case, there may be (and probably are) other factors at play: by no means am I saying these are all fair comparisons. But better results in this type of comparison are more likely to occur the better the player actually was. Thus, as a Bayesian matter, extreme results like these make it likely that Randy Moss was extremely good.
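To make the Bayesian point concrete, here is a toy update over three hypotheses about a receiver’s true quality. Every number in it (the prior and the assumed chance that every quarterback posts a big ANY/A jump under each hypothesis) is an invented assumption for illustration only, not an estimate from the data above. The point is the mechanics: when a result is much more likely under “extremely good” than under the alternatives, even a skeptical prior shifts substantially toward that hypothesis.

```python
def posterior(prior, likelihood):
    """Bayes' rule over a discrete set of hypotheses."""
    joint = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(joint.values())
    return {h: p / total for h, p in joint.items()}


# Invented, illustrative numbers only.
prior = {"ordinary": 0.70, "very good": 0.25, "extremely good": 0.05}
# Assumed probability that every QB improves sharply with this receiver.
likelihood = {"ordinary": 0.001, "very good": 0.02, "extremely good": 0.20}

post = posterior(prior, likelihood)
for h, p in post.items():
    print(f"{h}: {p:.2f}")
```

With these made-up inputs, “extremely good” goes from a 5% prior to roughly 64% posterior; different assumptions would give different numbers, but the direction of the shift follows from the likelihoods.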

So does this mean I think Moss is right? Really, I have no idea. “Greatness” is a subjective term, and Rice clearly had a longer and more fruitful (3 Super Bowl rings) career. But for actual “impact” on the game: If I were a betting man (and I am), I’d say that the quality and strength of evidence in Moss’s favor makes him the most likely “best ever” candidate.

[1/31 Edit: Made some minor clarifying changes throughout.]